Crawl Budget: How to Find It in Google Search Console and Its Use Cases

Hey there! If you're diving into the world of SEO, you've probably come across the term "crawl budget."

But what exactly is it, and why should you care? Let's break it down and see how you can use Google Search Console to keep tabs on it.

What is Crawl Budget?

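Crawl budget is the number of pages Googlebot can and wants to crawl on your website within a given timeframe. Essentially, it’s the amount of attention Google gives to your site. Crawling is not the same as indexing: a page has to be crawled before it can be indexed, but a crawled page is not guaranteed a place in the index.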

For large websites, understanding and optimizing your crawl budget is crucial because it can impact how well your pages are indexed and ranked.

Why is Crawl Budget Important?

It Ensures Important Pages are Indexed: If your site has thousands of pages, you want to make sure Google focuses on the most important ones.

It Improves SEO Performance: Efficient crawling helps in quicker indexing, which can lead to better rankings and visibility.

It Identifies Issues: Monitoring your crawl budget can help you spot issues like duplicate content or unnecessary URLs that may be wasting your crawl budget.

How to Find Crawl Budget in Google Search Console

Step 1: Access Google Search Console

First, log in to Google Search Console. If you haven’t set it up yet, add your site as a property and verify it before you continue.

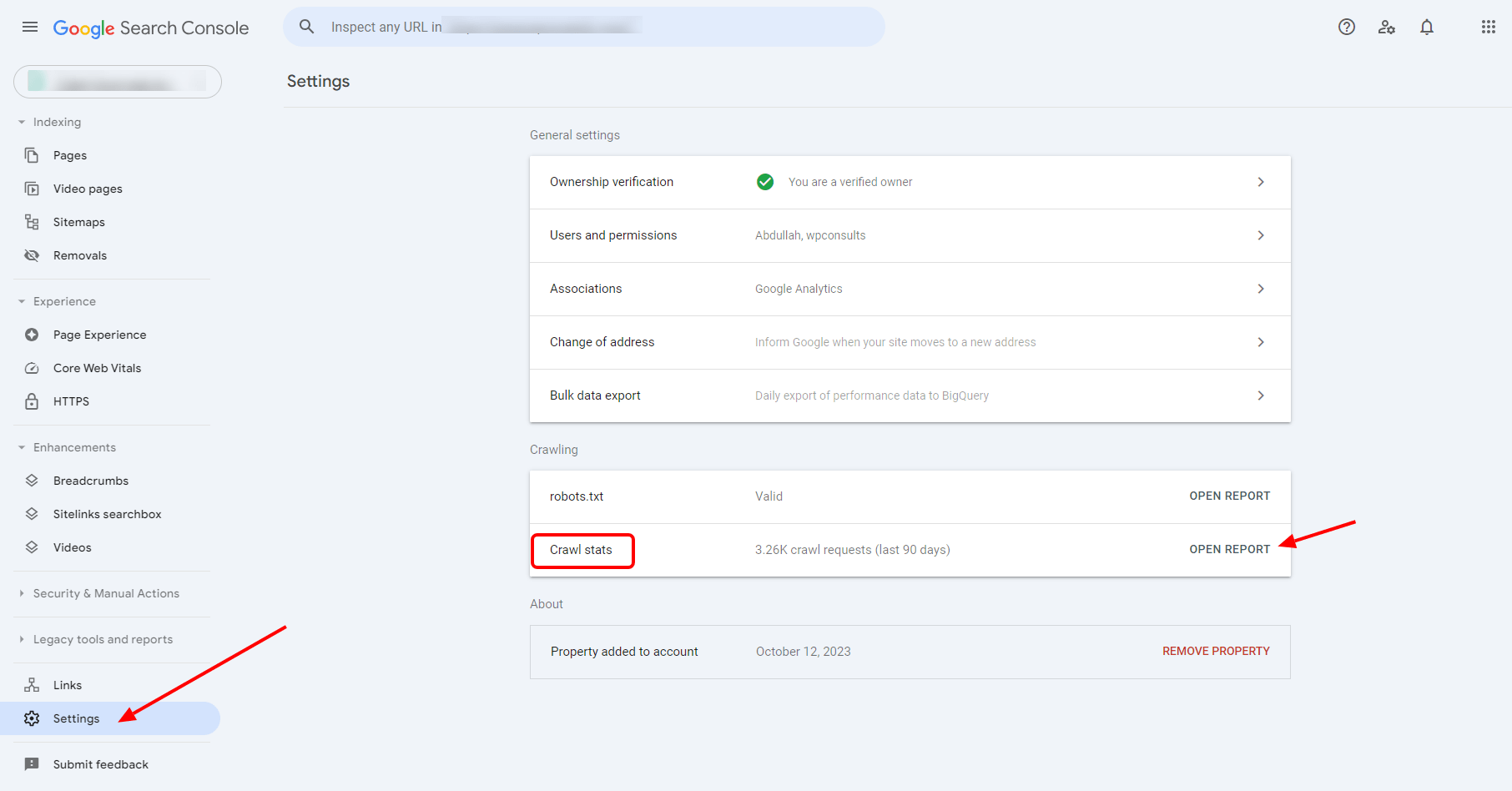

Step 2: Navigate to Settings

To get a detailed look at Google's crawl activity, open the "Settings" page. Scroll to the bottom of the left-hand menu and click "Settings."

Step 3: Inspect Crawl Stats Report

Under "Settings," you'll find the "Crawl stats" section. Click on it to open the report.

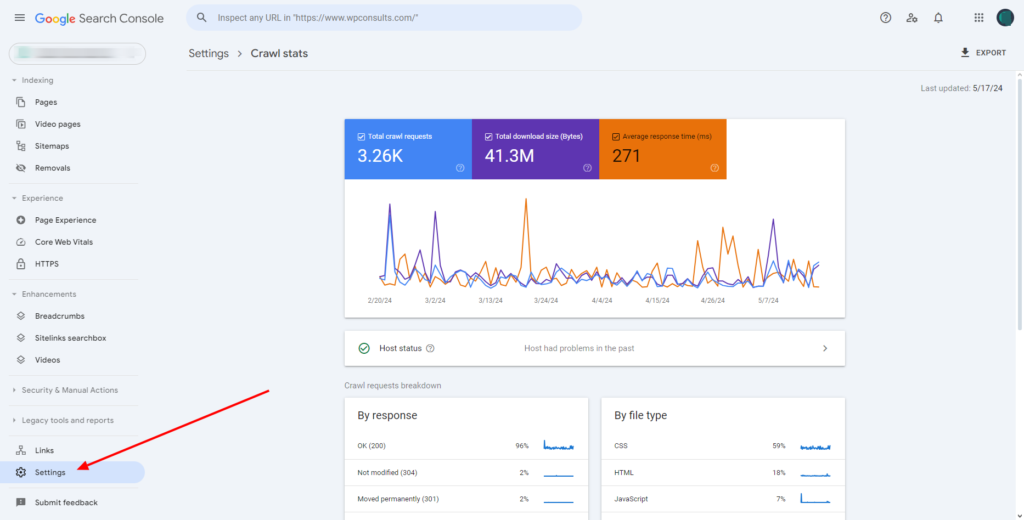

Here, you’ll see the total number of requests made by Googlebot over the last 90 days, the average response time, and more.

Analyzing Your Crawl Stats

In the Crawl Stats Report, you’ll see detailed information about Google’s crawling activity on your site, including:

Host Status: Shows if Google encountered any issues while crawling your site.

Total Crawl Requests: The total number of requests Googlebot made to your site.

Average Response Time: How quickly your server responded to crawl requests.

Requests Breakdown: Groups requests by response (e.g., success, redirect, error), file type, purpose, and Googlebot type.

This data helps you understand how Googlebot is interacting with your site and whether there are any issues affecting your crawl budget.
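If you also have access to your server's access logs, you can build a rough version of the response breakdown yourself. Here is a minimal sketch, assuming your logs use the common "combined" format. The sample lines, the `googlebot_breakdown` name, and the category labels are my own illustrations, not something Search Console provides.

```python
import re
from collections import Counter

# Assumption: logs follow the "combined" access log format.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def status_class(status):
    """Map an HTTP status code to a coarse category (labels are illustrative)."""
    if 200 <= status < 300:
        return "success"
    if 300 <= status < 400:
        return "redirect"
    if 400 <= status < 500:
        return "client error"
    if status >= 500:
        return "server error"
    return "other"


def googlebot_breakdown(lines):
    """Count requests per category for lines whose user agent mentions Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        # The user-agent string can be spoofed, so treat this as an estimate.
        if match and "Googlebot" in match.group("agent"):
            counts[status_class(int(match.group("status")))] += 1
    return counts


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET / HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /old-page HTTP/1.1" 301 0 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:10:00:09 +0000] "GET /missing HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/May/2024:10:00:12 +0000] "GET / HTTP/1.1" 200 5123 "-" "Mozilla/5.0 Firefox/125.0"',
]

if __name__ == "__main__":
    for category, count in googlebot_breakdown(SAMPLE_LOG).items():
        print(category, count)
```

The numbers will not match the Crawl Stats Report exactly, but a big gap between the two is worth a closer look.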

Use Cases for Monitoring and Optimizing Crawl Budget

1. Identify and Fix Crawl Errors:

If you notice a spike in 404 errors (page not found), it indicates that Google is trying to crawl non-existent pages. Fixing these errors ensures that Googlebot spends time on valuable pages instead.
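To decide which missing pages to fix first, it helps to rank them by how often Googlebot asks for them. Below is a rough sketch that assumes you have already pulled `(path, status, user_agent)` records out of your server logs. The `top_missing_paths` function and the sample records are hypothetical.

```python
from collections import Counter


def top_missing_paths(records, limit=10):
    """Return the most requested 404 paths among Googlebot hits.

    records: iterable of (path, status, user_agent) tuples (assumed shape).
    """
    missing = Counter(
        path
        for path, status, agent in records
        if status == 404 and "Googlebot" in agent
    )
    return missing.most_common(limit)


SAMPLE_RECORDS = [
    ("/old-offer", 404, "Googlebot/2.1"),
    ("/old-offer", 404, "Googlebot/2.1"),
    ("/old-offer", 404, "Googlebot/2.1"),
    ("/typo-page", 404, "Googlebot/2.1"),
    ("/blog/", 200, "Googlebot/2.1"),
    ("/old-offer", 404, "Firefox/125.0"),
]

if __name__ == "__main__":
    for path, hits in top_missing_paths(SAMPLE_RECORDS):
        print(f"{hits:>4}  {path}")
```

Paths near the top of the list are good candidates for a redirect to a relevant live page, or for cleaning up the internal links that still point at them.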

2. Improve Site Speed:

Slow-loading pages can eat into your crawl budget. Use the data from the Crawl Stats Report to identify and optimize slow pages, improving overall crawl efficiency.
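The Crawl Stats Report shows an average for the whole site, so you may need your own timing data to find the individual slow pages. Here is a small sketch, assuming you can collect `(path, response_ms)` pairs from your logs or a monitoring tool. The 1000 ms threshold is an arbitrary example of mine, not a limit published by Google.

```python
from collections import defaultdict
from statistics import mean


def slow_paths(timings, threshold_ms=1000.0):
    """Return (path, average_ms) pairs above the threshold, slowest first.

    timings: iterable of (path, response_ms) pairs (assumed shape).
    """
    by_path = defaultdict(list)
    for path, response_ms in timings:
        by_path[path].append(response_ms)

    slow = [
        (path, mean(values))
        for path, values in by_path.items()
        if mean(values) > threshold_ms
    ]
    return sorted(slow, key=lambda item: item[1], reverse=True)


SAMPLE_TIMINGS = [
    ("/", 200),
    ("/", 400),
    ("/category/shoes", 1500),
    ("/category/shoes", 2500),
    ("/search-results", 1200),
]

if __name__ == "__main__":
    for path, average in slow_paths(SAMPLE_TIMINGS):
        print(f"{average:8.0f} ms  {path}")
```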

3. Manage Duplicate Content:

If Googlebot is spending time on duplicate content, it’s wasting your crawl budget. Use canonical tags and update your robots.txt file to direct Googlebot towards unique and valuable content.
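A quick way to audit canonical tags is to pull the `rel="canonical"` link out of each page's HTML and compare it with the URL you expect. The sketch below uses only Python's standard library. The `find_canonical` helper and the sample page are illustrative, and fetching the HTML is left out.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values, in any letter case.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


SAMPLE_PAGE = """
<html><head>
  <title>Red shoes</title>
  <link rel="canonical" href="https://example.com/shoes/red/">
</head><body>...</body></html>
"""

if __name__ == "__main__":
    print(find_canonical(SAMPLE_PAGE))
```

If several URL variants of a page all report the same canonical URL, the tags are doing their job. A variant that returns `None` or points at itself deserves a second look.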

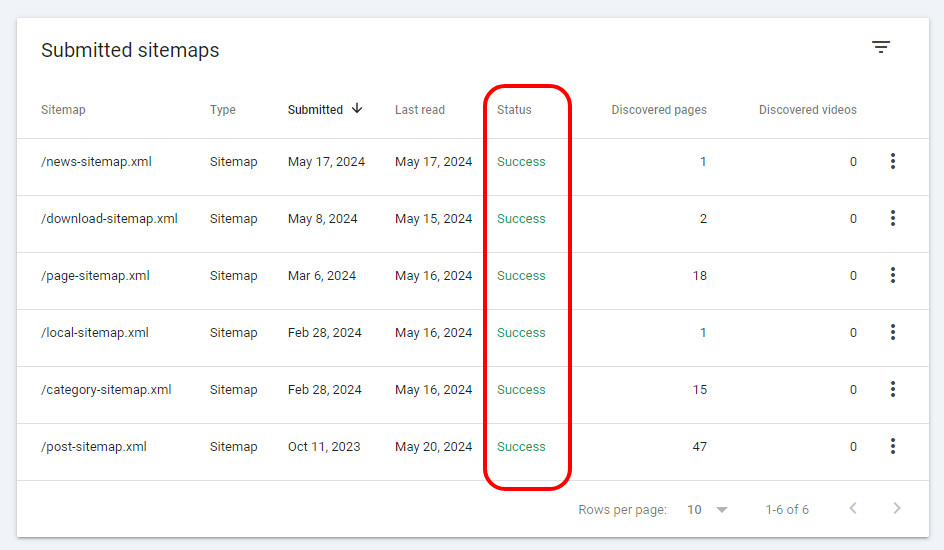

4. Optimize Your Sitemap:

A well-structured sitemap ensures that Googlebot can easily find and index your important pages. Regularly update your sitemap and submit it through Google Search Console.
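If your CMS or plugin does not generate a sitemap for you, a basic one is easy to produce with a script. This is a minimal sketch that follows the sitemaps.org format. The `build_sitemap` function and the example URLs are placeholders of mine, and a real site would usually pull the URL list from its database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML as a string.

    entries: iterable of (url, lastmod) pairs, where lastmod is a
    YYYY-MM-DD string or None (assumed shape).
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters are escaped
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


SAMPLE_ENTRIES = [
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/blog/crawl-budget", None),
]

if __name__ == "__main__":
    print(build_sitemap(SAMPLE_ENTRIES))
```

Save the output as `sitemap.xml` (or whatever name you prefer), make it reachable on your site, and submit that URL in the Sitemaps section of Google Search Console.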

5. Monitor Server Performance:

If your server response times are high, Googlebot might crawl fewer pages. Use the Crawl Stats Report to monitor server performance and make necessary improvements.

Practical Tips for Optimizing Crawl Budget

1. Check and Update Your Sitemap Regularly:

Make sure your sitemap is up-to-date and submitted to Google Search Console.

I have seen many websites vanish from search engines entirely, apart from their home page, because of a sitemap error caused by a plugin issue.

The owners did not check regularly, so they only noticed once their traffic had become negligible and they went looking for the cause.

So, my advice is to check your sitemap regularly, and fix or update it as soon as you notice an error.
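You can automate part of that check. The sketch below takes the raw content of your sitemap (downloading it is up to you) and reports the most obvious problems, such as an HTML page being served where the XML sitemap should be. The `sitemap_problem` function and its messages are my own illustration, not a Google tool.

```python
import xml.etree.ElementTree as ET


def sitemap_problem(document):
    """Return None if the document looks like an XML sitemap, else a short reason."""
    try:
        root = ET.fromstring(document)
    except ET.ParseError as exc:
        return f"not well-formed XML: {exc}"

    name = root.tag.rsplit("}", 1)[-1]  # drop the "{namespace}" prefix, if any
    if name not in ("urlset", "sitemapindex"):
        return f"unexpected root element <{name}>"
    if len(root) == 0:
        return "sitemap contains no entries"
    return None


GOOD_SITEMAP = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
</urlset>"""

HTML_INSTEAD = b"<html><body><p>Page not found</p></body></html>"

if __name__ == "__main__":
    print(sitemap_problem(GOOD_SITEMAP))
    print(sitemap_problem(HTML_INSTEAD))
```

Run something like this on a schedule and have it alert you whenever the result is not `None`, so a broken plugin does not go unnoticed for weeks.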

2. Utilize Robots.txt Wisely:

Block Googlebot from crawling low-value pages using the robots.txt file.
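Before you publish new robots.txt rules, it is worth testing them against a few real URLs so you don't block something important by accident. Here is a rough sketch using Python's built-in `urllib.robotparser`. The rules and URLs are made-up examples. Keep in mind that this parser does simple prefix matching and, as far as I know, does not support the wildcard patterns Googlebot understands, so treat it as a sanity check only.

```python
from urllib.robotparser import RobotFileParser

# Example rules only: adjust the paths to match your own low-value sections.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def blocked_urls(robots_txt, urls, agent="Googlebot"):
    """Return the URLs that the given robots.txt rules block for the agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


SAMPLE_URLS = [
    "https://example.com/blog/crawl-budget",
    "https://example.com/cart/checkout",
    "https://example.com/search?q=seo",
]

if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, SAMPLE_URLS):
        print("blocked:", url)
```

Also remember that robots.txt stops crawling, not indexing: a blocked URL can still show up in search results if other pages link to it.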

3. Focus on Quality Content:

Create high-quality content that provides value to your visitors, encouraging Google to crawl and index your pages more frequently.

Your content does not have to be entirely unique, but it should not simply repeat what others have already said. Try to find gaps or unsolved problems, then offer your visitors proper solutions. That is what providing value means.

Need Any Technical SEO Help?

Don't hesitate to contact us or email me for any technical SEO assistance.

Remember, a healthy technical foundation is essential for optimal SEO performance, so it pays to get it right.

Conclusion

Understanding and optimizing your crawl budget is a critical aspect of effective SEO. By using Google Search Console to monitor and manage your crawl stats, you can ensure that Googlebot crawls your site efficiently, leading to better indexing and improved search rankings.

A well-crawled site has a much better chance of ranking well, so keep an eye on your crawl stats and make adjustments whenever you spot a problem.
