How to Solve the “Sitemap Couldn’t Fetch” Issue in Google Search Console: An In-Depth Guide


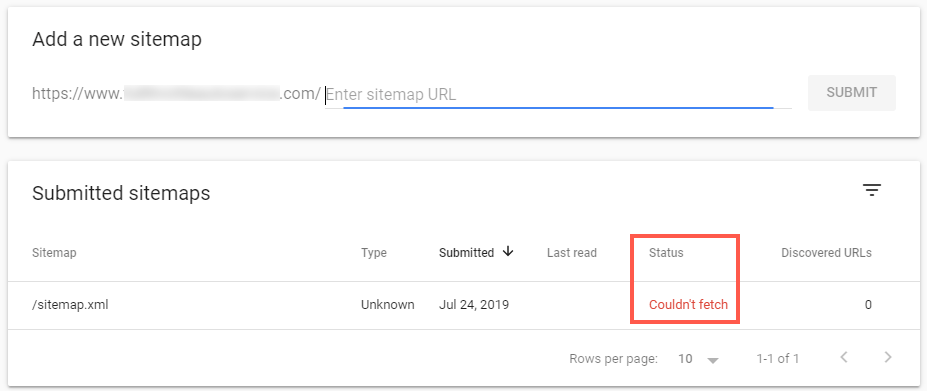

The "sitemap couldn't fetch" error in Google Search Console can be a frustrating obstacle for webmasters and SEO professionals.

This comprehensive guide will walk you through the common causes of this issue and provide advanced solutions to ensure your sitemap is properly crawled and indexed by Google.

Understanding the Problem

When Google Search Console reports that it "couldn't fetch" your sitemap, it means that the search engine's crawlers were unable to access or process the sitemap file you've submitted. This can have several negative impacts on your site's SEO:

- Reduced crawl efficiency

- Delayed indexing of new or updated content

- Potential loss of organic search visibility

Sometimes the cause is as simple as never having created a sitemap in the first place, so that is the first thing to rule out.

Common Causes and Advanced Solutions

1. No Sitemap Exists

Problem:

The most fundamental cause of a "sitemap couldn't fetch" error could be that no sitemap has been created for the website yet.

Solutions:

- Check whether a sitemap actually exists at the URL you submitted to Google Search Console. Enter that URL in your browser's address bar, for example: https://yourwebsite.com/sitemap.xml

- If no sitemap exists, create one using one of the following methods:

  - Use your Content Management System's built-in sitemap generation tool (many modern CMS platforms have this feature).

  - Use online sitemap generator tools for small to medium-sized websites.

  - Develop a custom sitemap generation script for larger, more complex websites.

  - Manually create a sitemap for very small websites (not recommended for larger sites).

- Ensure the sitemap follows the correct XML schema as defined by sitemaps.org.

- After creating the sitemap, place it in your website's root directory or a logical subdirectory.

- Submit the new sitemap URL to Google Search Console.
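
For the custom-script route, a minimal sketch in Python might look like the following. It builds a sitemap in the sitemaps.org format from a list of absolute URLs. The function name build_sitemap and the example URLs are illustrative assumptions, not part of any CMS or Google tooling.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap XML document (bytes) listing the given absolute URLs.

    Hypothetical helper for illustration; it emits only the required <loc>
    element for each URL and no optional fields such as <lastmod>.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" when serializing.
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```

Writing the returned bytes to a file named sitemap.xml in the site's root directory would then make it available at the URL you submit to Search Console.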

2. Server Configuration Issues

Problem:

Your server may be blocking Google's crawlers or responding with errors.

Advanced Solutions:

- Implement IP-based access control lists (ACLs) to ensure Google's IP ranges are allowed.

- Configure your server to handle high crawl rates without triggering security measures.

- Use log analysis tools to identify and debug server responses to Googlebot requests.
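
As a rough illustration of the log-analysis step, the sketch below tallies the HTTP status codes your server returned when a client identifying itself as Googlebot requested the sitemap. It assumes access logs in the common "combined" format and a sitemap at /sitemap.xml; both are assumptions to adapt to your setup. The user-agent string can be spoofed, so treat the result as a hint rather than proof.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer and user-agent fields of a
# "combined" format access log line (an assumed log format).
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_sitemap_statuses(lines, sitemap_path="/sitemap.xml"):
    """Count status codes served to Googlebot-labelled requests for the sitemap."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match is None:
            continue  # skip lines that are not in the expected format
        if "Googlebot" in match["agent"] and match["path"] == sitemap_path:
            counts[int(match["status"])] += 1
    return counts
```

A result dominated by 403, 429, or 5xx codes would suggest that a firewall, rate limiter, or server error is getting in the way of the fetch.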

3. Robots.txt Restrictions

Problem:

Your robots.txt file might be inadvertently blocking access to your sitemap.

Advanced Solutions:

- Implement a staged robots.txt strategy using environment-specific configurations.

- Use the wildcard patterns that robots.txt supports (* and $) to create more granular crawl rules. Note that robots.txt does not support full regular expressions.

- Leverage the Sitemap: directive in robots.txt to explicitly declare sitemap locations.
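
To check a robots.txt file before deploying it, a small sketch using Python's standard urllib.robotparser module could look like this. The function name check_robots is a hypothetical helper. Note that the standard-library parser uses simple prefix matching, so it may not mirror Google's handling of wildcard rules exactly.

```python
from urllib.robotparser import RobotFileParser


def check_robots(robots_txt, sitemap_url, user_agent="Googlebot"):
    """Return (allowed, declared_sitemaps) for the given robots.txt text.

    allowed tells whether user_agent may fetch sitemap_url according to
    Python's parser; declared_sitemaps lists the Sitemap: entries found.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    allowed = parser.can_fetch(user_agent, sitemap_url)
    # site_maps() returns None when no Sitemap: line is present (Python 3.8+).
    declared = parser.site_maps() or []
    return allowed, declared
```

If allowed comes back False, a Disallow rule covers the sitemap path and is a likely reason for the fetch failure.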

4. Sitemap Format and Structure Issues

Problem:

Your sitemap may not adhere to the proper XML schema or contain invalid URLs.

Advanced Solutions:

- Implement dynamic sitemap generation tied to your content management system (CMS).

- Use XSLT to transform complex data structures into valid sitemap formats.

- Implement pagination for large sitemaps (>50,000 URLs) using sitemap index files.
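
The splitting step can be sketched as follows: break the full URL list into chunks of at most 50,000 entries, write each chunk as its own sitemap file, and list those files in a sitemap index. The helper names and the child sitemap URLs you pass in are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemaps.org protocol


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive chunks of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls):
    """Return a sitemap index document (bytes) pointing at each child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        child = ET.SubElement(index, "sitemap")
        ET.SubElement(child, "loc").text = url
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)
```

You would then submit only the index file's URL to Search Console, and Google discovers the child sitemaps from it.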

5. URL Consistency and Canonicalization

Problem:

Inconsistent URL formats or improper canonicalization can confuse crawlers.

Advanced Solutions:

- Implement URL normalization at the server level using URL rewriting rules.

- Use the link element with rel="canonical" to explicitly define preferred URL versions.

- Leverage the hreflang attribute for internationalized content to prevent duplicate content issues.
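
The same consistency can be applied to the URLs you write into the sitemap. The sketch below is one possible normalization, under the assumption that your preferred form is HTTPS with a lowercase host and no fragment. Your own canonical rules (www versus non-www, trailing slashes) may differ, so treat normalize_url as a hypothetical starting point.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url):
    """Return the URL as https, with a lowercase host and no fragment.

    Assumed policy for illustration only; the path and query string are
    left untouched because they can be case-sensitive.
    """
    parts = urlsplit(url)
    host = parts.hostname or ""  # urlsplit lowercases the hostname
    if parts.port and parts.port not in (80, 443):
        host += f":{parts.port}"  # keep non-default ports
    path = parts.path or "/"  # an empty path and "/" mean the same page
    return urlunsplit(("https", host, path, parts.query, ""))
```

Running every URL through one function like this before writing the sitemap helps avoid listing the same page under several spellings.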

6. Performance and Timeout Issues

Problem:

Slow server response times can cause fetch attempts to time out.

Solutions:

- Implement caching strategies specifically for sitemap files to reduce generation time.

- Use CDNs or edge caching to serve sitemaps from geographically distributed locations.

- Optimize database queries used in dynamic sitemap generation to reduce load times.
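
One simple form of the caching idea is to keep the generated sitemap in memory and rebuild it only after a time-to-live has passed. The class below is a minimal sketch; CachedSitemap, the one-hour default, and the generate callback are all assumptions, and a production setup might use your framework's or CDN's cache instead.

```python
import time


class CachedSitemap:
    """Serve a generated sitemap from memory until it is older than the TTL."""

    def __init__(self, generate, ttl_seconds=3600, clock=time.monotonic):
        self._generate = generate  # callable that returns the sitemap body
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so the cache is easy to test
        self._body = None
        self._built_at = 0.0

    def get(self):
        """Return the cached body, regenerating it if missing or expired."""
        now = self._clock()
        if self._body is None or now - self._built_at >= self._ttl:
            self._body = self._generate()
            self._built_at = now
        return self._body
```

With this in place, a slow database query runs at most once per TTL window rather than on every crawler request.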

7. HTTPS and SSL Certificate Problems

Problem:

Invalid or expired SSL certificates can prevent secure fetching of sitemaps.

Solutions:

- Implement automated SSL renewal processes to prevent expiration.

- Use HSTS (HTTP Strict Transport Security) headers to enforce HTTPS connections.

- Implement proper SSL/TLS version support and cipher suites for optimal security and compatibility.
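
To catch an expiring certificate before it breaks sitemap fetching, a small check like the following sketch can run on a schedule. It uses only Python's standard ssl and socket modules. The function names and any alert threshold you choose (for example, 14 days) are assumptions.

```python
import socket
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Return days left until a certificate's notAfter timestamp.

    not_after uses the format returned by getpeercert(), for example
    "Jan  5 09:34:43 2018 GMT". A negative result means it has expired.
    """
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86400


def fetch_not_after(host, port=443, timeout=10):
    """Connect to host over TLS and return its certificate's notAfter string."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

Calling days_until_expiry(fetch_not_after("yourwebsite.com")) gives the remaining lifetime. If the certificate is already invalid, the TLS handshake itself raises an error, which is also a useful signal.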

Advanced Troubleshooting Techniques

- Log Analysis: Use tools like ELK Stack (Elasticsearch, Logstash, Kibana) or Splunk to analyze server logs and identify patterns in Googlebot's crawl behavior.

- Network Monitoring: Implement packet capture and analysis to diagnose issues at the network level, ensuring proper communication between your server and Google's crawlers.

- Synthetic Monitoring: Set up external monitoring services that simulate Googlebot requests to proactively identify fetch issues before they impact your Search Console reports.

- API Integration: Leverage the Google Search Console API to programmatically submit sitemaps and monitor fetch status, allowing for automated reporting and issue detection.

- A/B Testing Sitemaps: Implement a system to serve different sitemap versions to Googlebot, allowing you to test and optimize sitemap structures without impacting live search results.
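
As one way to approach the synthetic monitoring idea, the sketch below requests the sitemap with a Googlebot-style user-agent string and reports anything that looks wrong. The function names are hypothetical, and the content-type test is only a heuristic. A request from your own machine does not come from Google's IP ranges, so it cannot reproduce IP-based blocking.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def diagnose(status, content_type, body):
    """Return a list of human-readable problems with a sitemap response."""
    problems = []
    if status != 200:
        problems.append(f"unexpected HTTP status {status}")
    if "xml" not in content_type.lower():
        problems.append(f"content type is {content_type!r}, expected XML")
    try:
        root = ET.fromstring(body)
    except ET.ParseError as exc:
        problems.append(f"body is not well-formed XML: {exc}")
    else:
        # Strip the namespace prefix, e.g. "{...}urlset" -> "urlset".
        name = root.tag.rsplit("}", 1)[-1]
        if name not in ("urlset", "sitemapindex"):
            problems.append(f"root element is <{name}>, not a sitemap")
    return problems


def fetch_and_diagnose(url, timeout=10):
    """Fetch url with a Googlebot-style user agent and diagnose the response."""
    request = urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            content_type = response.headers.get("Content-Type", "")
            return diagnose(response.status, content_type, response.read())
    except urllib.error.HTTPError as err:
        return [f"unexpected HTTP status {err.code}"]
    except urllib.error.URLError as err:
        return [f"request failed: {err.reason}"]
```

An empty list from fetch_and_diagnose("https://yourwebsite.com/sitemap.xml") means none of these basic checks failed. It does not guarantee that Search Console will fetch the file successfully.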

Conclusion

Resolving the "sitemap couldn't fetch" issue requires a multifaceted approach that addresses server configuration, content structure, and performance optimization.

By implementing these advanced solutions and leveraging sophisticated troubleshooting techniques, you can ensure that your sitemaps are consistently accessible to Google's crawlers, thereby improving your site's search engine visibility and overall SEO performance.

Remember that sitemap optimization is an ongoing process. Regularly monitor your Search Console reports, stay informed about changes in Google's crawling and indexing algorithms, and be prepared to adapt your strategies as needed to maintain optimal performance.
